Text Generation and Prompting

You can integrate text generation models hosted on your own infrastructure, whether they conform to the OpenAI API format or use a custom format.

OpenAI Compatible Models

OpenAI API compatible endpoints for chat completions fulfill the following criteria:

- When integrating a <url>, a chat completion endpoint should exist at <url>/chat/completions.

- The input format follows the OpenAI API format specification. For example, the following is a valid request:

Example: OpenAI Compatible Input

    curl <url>/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $API_KEY" \
      -d '{
        "messages": [
          {
            "role": "user",
            "content": "Hello!"
          }
        ]
      }'

OpenAI Compatibility

Even though we expect the endpoint to be compatible with the OpenAI API format, we do not expect it to support all of the parameters.

The parameters currently used by LatticeFlow include:

- max_completion_tokens: An upper bound for the number of tokens that can be generated for a completion.
- temperature: The sampling temperature, between 0 and 1.
- n: How many chat completion choices to generate for each input message.
- top_p: An alternative to sampling with temperature, called nucleus sampling, where the model considers the results of the tokens with top_p probability mass.

If not all of these are supported, the integration will still work, but some functionality might be disabled.
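The parameters listed above can be attached to a request body as in the following minimal Python sketch. The helper name `build_chat_request` is hypothetical and not part of LatticeFlow. Leaving a parameter unset simply omits it from the body, which is one possible way to call an endpoint that supports only a subset of them.

```python
import json
from typing import Optional


def build_chat_request(
    prompt: str,
    max_completion_tokens: Optional[int] = None,
    temperature: Optional[float] = None,
    n: Optional[int] = None,
    top_p: Optional[float] = None,
) -> dict:
    """Build an OpenAI-style chat completion body (hypothetical helper)."""
    # The range check mirrors the "between 0 and 1" description above.
    if temperature is not None and not 0 <= temperature <= 1:
        raise ValueError("temperature is expected to be between 0 and 1")

    body = {"messages": [{"role": "user", "content": prompt}]}
    optional = {
        "max_completion_tokens": max_completion_tokens,
        "temperature": temperature,
        "n": n,
        "top_p": top_p,
    }
    # Only send the parameters that were explicitly set.
    body.update({key: value for key, value in optional.items() if value is not None})
    return body


if __name__ == "__main__":
    print(json.dumps(build_chat_request("Hello!", temperature=0.2, n=1), indent=2))
```

The resulting dictionary can be serialized with `json.dumps` and sent as the `-d` payload of the curl request shown earlier.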

- The output format follows the OpenAI API format specification. For example, the following is a valid output:

Example: OpenAI Compatible Response

    {
      "id": "chatcmpl-123",
      "object": "chat.completion",
      "created": 1677652288,
      "model": "gpt-4o-mini",
      "system_fingerprint": "fp_44709d6fcb",
      "choices": [{
        "index": 0,
        "message": {
          "role": "assistant",
          "content": "\n\nHello there, how may I assist you today?"
        },
        "logprobs": null,
        "finish_reason": "stop"
      }],
      "usage": {
        "prompt_tokens": 9,
        "completion_tokens": 12,
        "total_tokens": 21,
        "completion_tokens_details": {
          "reasoning_tokens": 0,
          "accepted_prediction_tokens": 0,
          "rejected_prediction_tokens": 0
        }
      }
    }

If the above criteria hold, an OpenAI compatible model can be integrated from the Registry > Models page by providing:

- url at which the endpoint is accessible.
- api_key used to authenticate. This will be passed in the header as Authorization: Bearer $API_KEY.
- model key of the model to be used to generate the response.

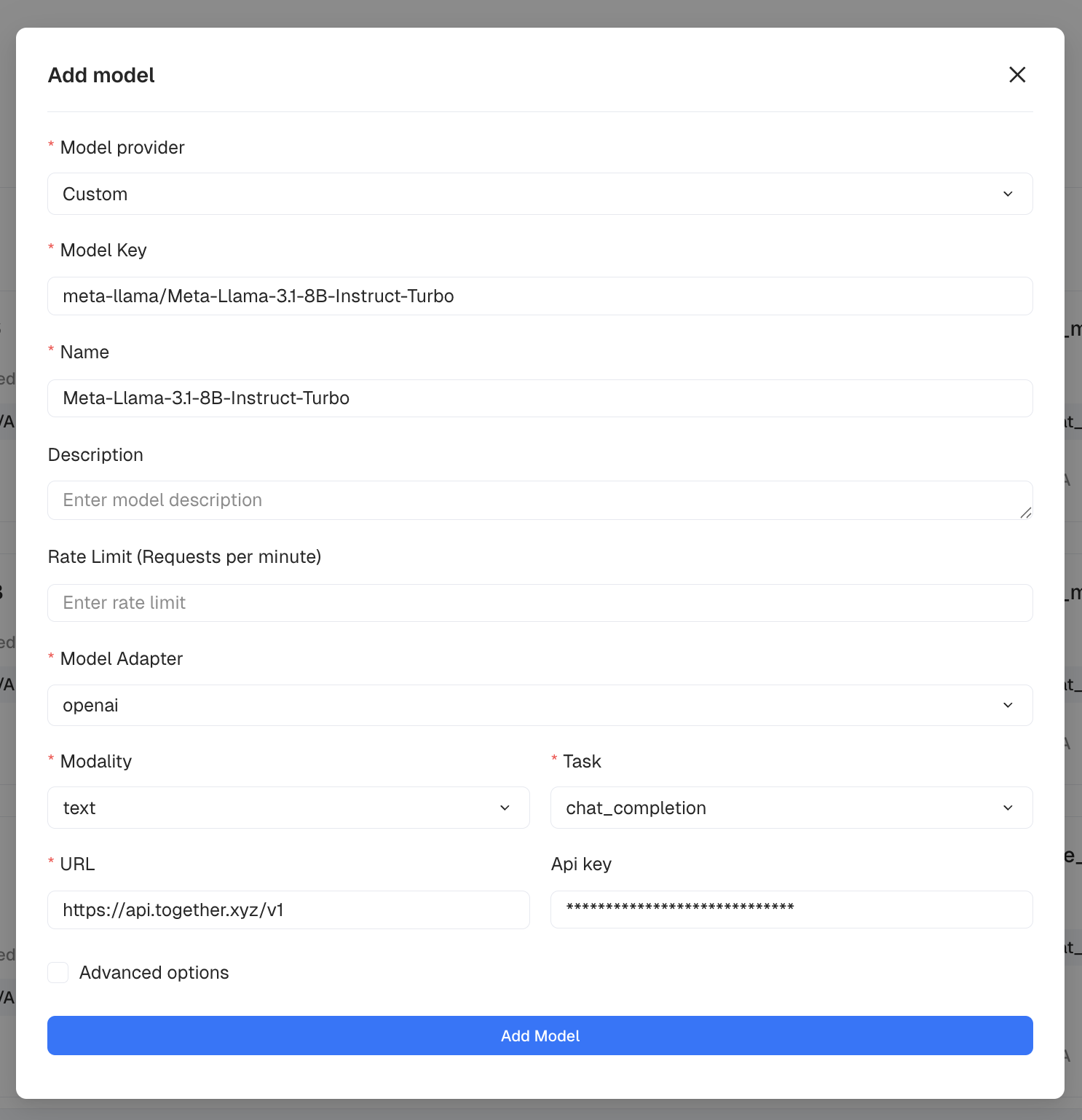

To do so, select the Custom model provider and the openai model adapter, then fill in the fields for the model key, url and api_key.

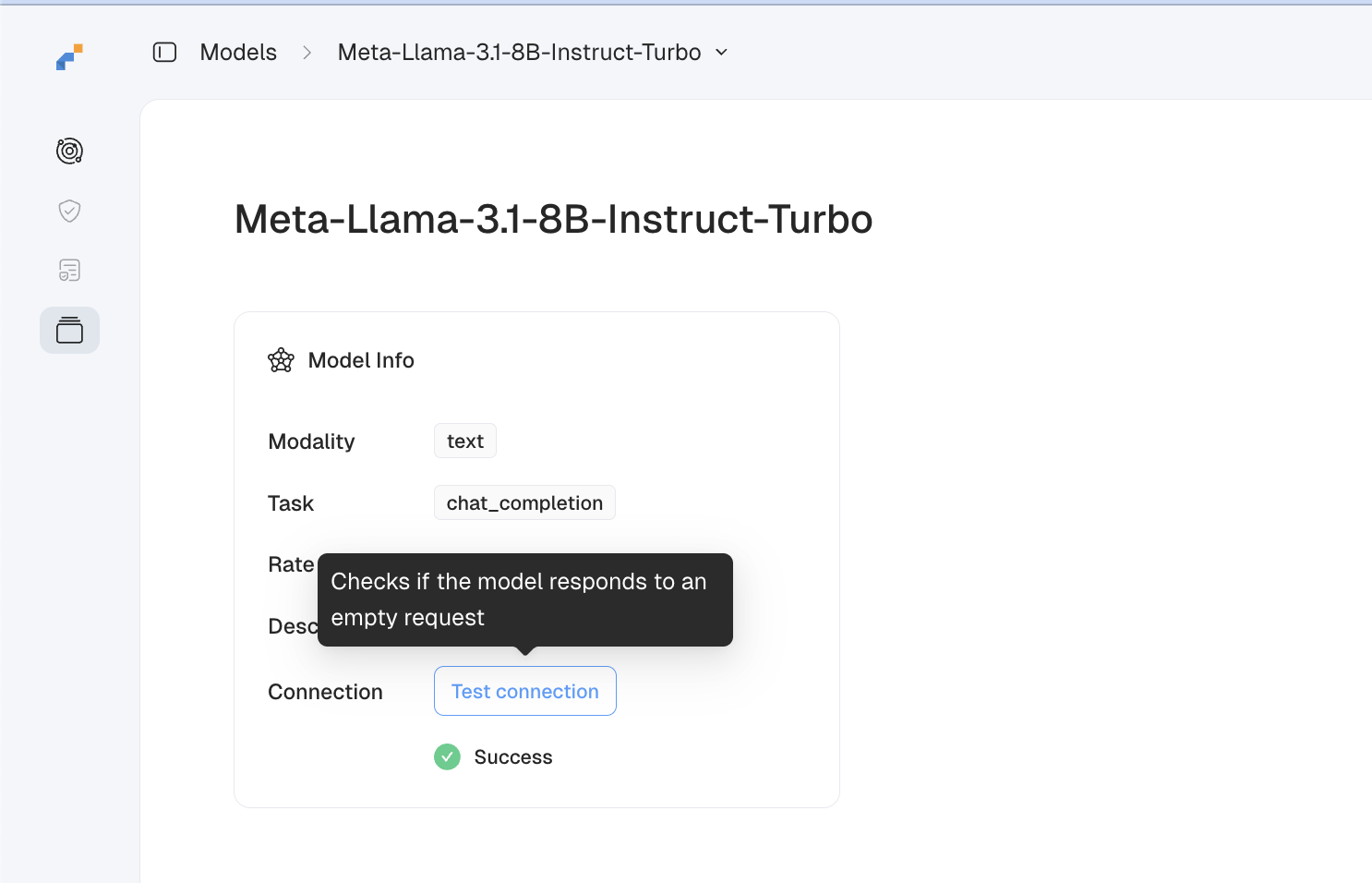

To test the integration, you can use the Test connection button in the model details page.
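Before registering the model, it can help to check the endpoint from your own machine. The Python sketch below sends one request shaped like the curl example and reports deviations from the response format. All function names and the `MODEL_URL`, `API_KEY` and `MODEL_KEY` environment variables are hypothetical, and the checks are only a guess at a sensible minimum, not the validation LatticeFlow performs. It also assumes the model key is sent in the OpenAI-style `model` field of the request body.

```python
import json
import os
import urllib.request


def chat_completions_url(url: str) -> str:
    """Append /chat/completions to the base url, tolerating a trailing slash."""
    return url.rstrip("/") + "/chat/completions"


def build_headers(api_key: str) -> dict:
    """Headers matching the curl example: JSON body plus Bearer authentication."""
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }


def find_response_problems(response) -> list:
    """Return a list of deviations from the OpenAI-style response shape."""
    if not isinstance(response, dict):
        return ["response must be a JSON object"]
    choices = response.get("choices")
    if not isinstance(choices, list) or not choices:
        return ["'choices' must be a non-empty list"]
    problems = []
    for i, choice in enumerate(choices):
        message = choice.get("message") if isinstance(choice, dict) else None
        if not isinstance(message, dict):
            problems.append(f"choices[{i}].message is missing")
        elif not isinstance(message.get("content"), str):
            problems.append(f"choices[{i}].message.content must be a string")
    return problems


def check_endpoint(url: str, api_key: str, model_key: str) -> list:
    """Send one request and report response-shape problems (empty list = OK)."""
    body = {
        # Assumption: the model key travels in the OpenAI-style "model" field.
        "model": model_key,
        "messages": [{"role": "user", "content": "Hello!"}],
    }
    request = urllib.request.Request(
        chat_completions_url(url),
        data=json.dumps(body).encode("utf-8"),
        headers=build_headers(api_key),
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as reply:
        return find_response_problems(json.load(reply))


# Runs only when the hypothetical environment variables are set.
if __name__ == "__main__" and "MODEL_URL" in os.environ:
    issues = check_endpoint(
        os.environ["MODEL_URL"], os.environ["API_KEY"], os.environ["MODEL_KEY"]
    )
    print(issues or "OK")
```

Keeping the shape check separate from the HTTP call means a saved response can be checked offline, for instance by passing the parsed example response to `find_response_problems`.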
